Reactive

beyond hype

Introduction & Plan

1. Intro

2. Methodology

3. Results

Reactive?

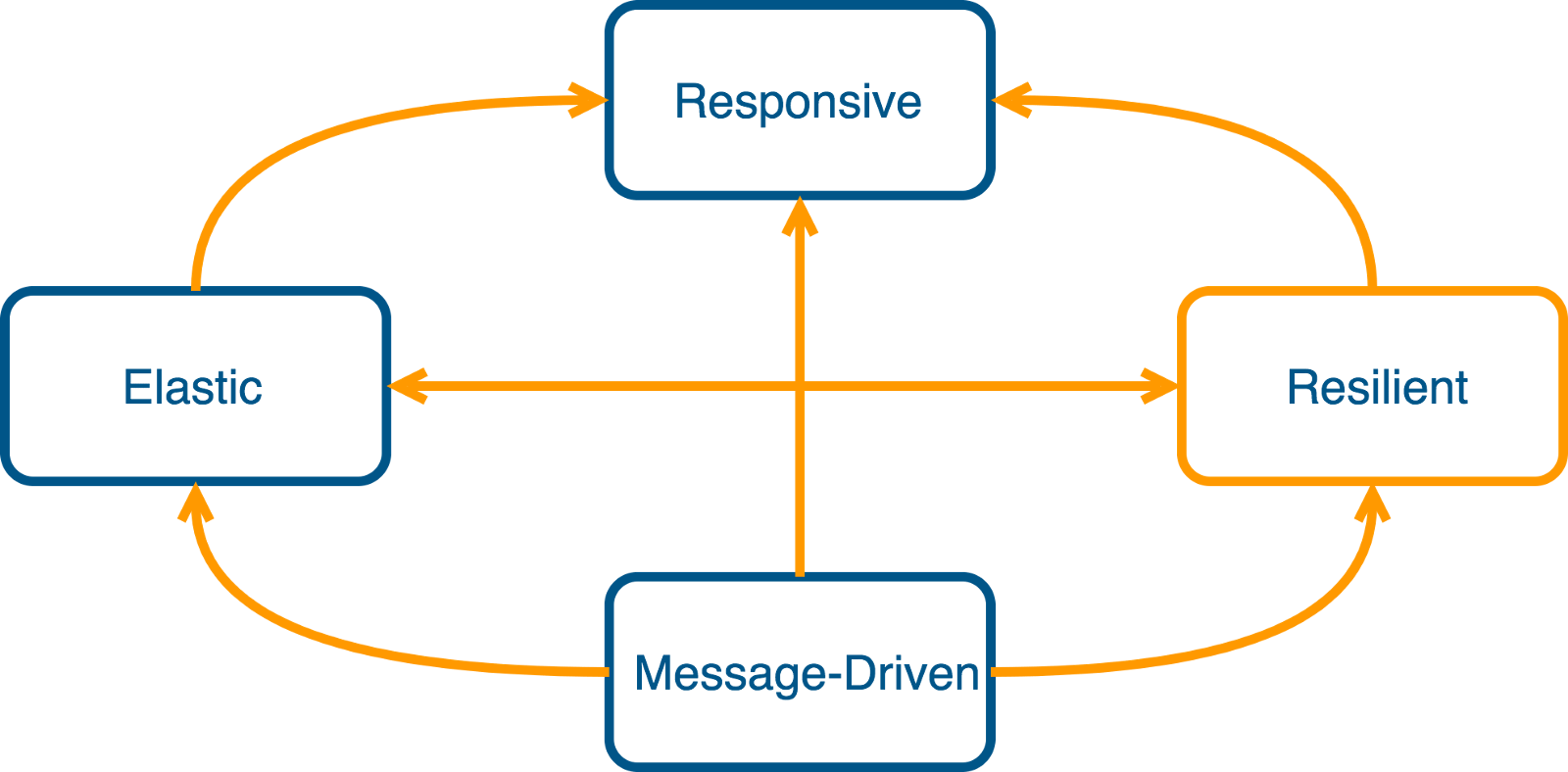

The Reactive Manifesto

Confusion

Reactive

React to Events

React to Load

React to Failure

React to Users

Responsiveness

Reactive Programming

Async / Non-blocking communication

Why async / non-blocking is important

Thread per request
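A minimal Scala sketch of the thread-per-request cost (not code from the talk). A fixed pool of 2 threads stands in for a servlet container's worker pool, and each hypothetical "request" blocks its thread for 50 ms, as if waiting on a blocking I/O call. However many requests arrive, at most 2 are ever in flight and the rest wait in the queue. The pool size, sleep time and `handleRequest` are illustrative assumptions.

```scala
import java.util.concurrent.{Executors, TimeUnit}
import java.util.concurrent.atomic.AtomicInteger

// Hypothetical stand-in for a servlet container: a fixed pool of worker threads,
// with one thread fully occupied by each in-flight request.
val poolSize = 2
val pool = Executors.newFixedThreadPool(poolSize)

val inFlight = new AtomicInteger(0)    // requests currently holding a thread
val maxInFlight = new AtomicInteger(0) // highest concurrency observed

// Each "request" blocks its thread for 50 ms, like a blocking DB or S3 call would.
def handleRequest(): Unit = {
  val now = inFlight.incrementAndGet()
  maxInFlight.accumulateAndGet(now, (a, b) => math.max(a, b))
  try Thread.sleep(50)
  finally inFlight.decrementAndGet()
}

val requests = 8
(1 to requests).foreach { _ =>
  pool.submit(new Runnable { def run(): Unit = handleRequest() })
}
pool.shutdown()
pool.awaitTermination(10, TimeUnit.SECONDS)

// With blocking I/O, concurrency is capped by the number of threads, not by the load.
println(s"max requests in flight: ${maxInFlight.get} (pool size $poolSize)")
```

Raising the pool size lifts the cap, but every extra thread costs memory and context switches. That trade-off is what the async / non-blocking stacks below try to avoid.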

Async

vs

Non-blocking

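ssize_t recv(
    int sockfd,
    void *buf,
    size_t len,
    int flags
);

EAGAIN or EWOULDBLOCK: on a non-blocking socket with no data available, recv returns -1 and sets errno to one of these instead of waiting.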
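The JVM exposes the same idea through `java.nio`. A channel switched to non-blocking mode returns immediately from `read` and reports 0 bytes rather than parking the thread, which is the NIO counterpart of `EAGAIN`/`EWOULDBLOCK`. The minimal Scala sketch below uses an in-process `Pipe`, so it needs no network. The message content is an arbitrary assumption.

```scala
import java.nio.ByteBuffer
import java.nio.channels.Pipe
import java.nio.charset.StandardCharsets

val pipe = Pipe.open()
pipe.source().configureBlocking(false) // reads on this end never block

val buf = ByteBuffer.allocate(64)

// Nothing has been written yet: a blocking read would park the thread here,
// but the non-blocking read returns 0 straight away.
val firstRead = pipe.source().read(buf)

// Once data is available, the same call returns the number of bytes read.
pipe.sink().write(ByteBuffer.wrap("bid".getBytes(StandardCharsets.UTF_8)))
var secondRead = 0
while (secondRead == 0) secondRead = pipe.source().read(buf) // poll until the bytes arrive

println(s"first read: $firstRead bytes, second read: $secondRead bytes")
```

The polling loop is only there to keep the sketch short. Real code would hand readiness tracking to a selector or event loop instead of spinning.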

Non-blocking

Slick 3

val f: Future[Int] = db.run(
  bids += AuctionBid(...) // DBIO[Int]: number of inserted rows
)

Multiple ops

val a = (
  for {
    _ <- bids += AuctionBid(...)
    ...
    _ <- userTransactions += Transaction(...)
  } yield ()
).transactionally

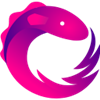

val f: Future[Unit] = db.run(a)

Cassandra

val futureBid: Future[Unit] = (
  for {
    _ <- verifyBidAmountIsHighest(
      session.executeAsync(
        bidsQuery(auctionId)
      ).asScala
    )
    _ <- session.executeAsync(insertBidQuery).asScala
  } yield ()
)
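The shape of the Cassandra example can be reproduced with plain `scala.concurrent.Future`s: the insert must run only after the verification succeeds. In this sketch, `fetchHighestBid`, `verifyBidAmountIsHighest` and `insertBid` are hypothetical in-memory stand-ins for the driver calls. The second step is created inside the `for`, so it starts only once the first has completed, and a failed verification short-circuits the insert.

```scala
import scala.concurrent.{Await, ExecutionContext, Future}
import scala.concurrent.duration._
import scala.collection.concurrent.TrieMap

implicit val ec: ExecutionContext = ExecutionContext.global

// Hypothetical in-memory "bids table": auctionId -> highest bid so far.
val highestBids = TrieMap.empty[String, BigDecimal]

// Stand-in for session.executeAsync(bidsQuery(auctionId)).asScala
def fetchHighestBid(auctionId: String): Future[Option[BigDecimal]] =
  Future(highestBids.get(auctionId))

// Stand-in for verifyBidAmountIsHighest: fails the Future if the bid is too low.
def verifyBidAmountIsHighest(amount: BigDecimal, current: Future[Option[BigDecimal]]): Future[Unit] =
  current.flatMap {
    case Some(highest) if amount <= highest =>
      Future.failed(new IllegalArgumentException(s"bid $amount is not higher than $highest"))
    case _ => Future.unit
  }

// Stand-in for session.executeAsync(insertBidQuery).asScala
def insertBid(auctionId: String, amount: BigDecimal): Future[Unit] =
  Future { highestBids.put(auctionId, amount); () }

def placeBid(auctionId: String, amount: BigDecimal): Future[Unit] =
  for {
    _ <- verifyBidAmountIsHighest(amount, fetchHighestBid(auctionId))
    _ <- insertBid(auctionId, amount) // created only after verification succeeded
  } yield ()

val accepted = Await.ready(placeBid("a1", BigDecimal(100)), 5.seconds).value.get
val rejected = Await.ready(placeBid("a1", BigDecimal(50)), 5.seconds).value.get

println(s"first bid: $accepted, second bid: $rejected, stored: ${highestBids("a1")}")
```

Like any check-then-insert, this sketch is not atomic. Two concurrent bids can both pass verification before either insert lands.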

Netty & Multiplexing
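Netty's NIO transport is built on the JDK's selector-based multiplexing. A single event-loop thread asks a `Selector` which of many registered channels are ready and serves only those, instead of dedicating a blocked thread to each connection. The bare-bones Scala sketch below shows that loop over `java.nio`. Two in-process `Pipe`s stand in for client connections, the connection names are made up, and Netty's own event loop adds a great deal on top of this.

```scala
import java.nio.ByteBuffer
import java.nio.channels.{Pipe, SelectionKey, Selector}
import java.nio.charset.StandardCharsets
import scala.collection.mutable

val selector = Selector.open()

// Two hypothetical "connections", both registered with the same selector.
val connections = (1 to 2).map { i =>
  val pipe = Pipe.open()
  pipe.source().configureBlocking(false) // required before register()
  pipe.source().register(selector, SelectionKey.OP_READ, s"conn-$i") // attachment = name
  i -> pipe
}

// Clients write; no thread is parked waiting on any particular connection.
connections.foreach { case (i, pipe) =>
  pipe.sink().write(ByteBuffer.wrap(s"hello from conn-$i".getBytes(StandardCharsets.UTF_8)))
}

// A single thread serves whichever channels the selector reports as ready.
val received = mutable.Map.empty[String, String]
val deadline = System.currentTimeMillis() + 5000
while (received.size < connections.size && System.currentTimeMillis() < deadline) {
  selector.select(100) // wait up to 100 ms for at least one ready channel
  val ready = selector.selectedKeys().iterator()
  while (ready.hasNext) {
    val key = ready.next()
    ready.remove() // selected keys must be cleared by hand
    val buf = ByteBuffer.allocate(64)
    val n = key.channel().asInstanceOf[Pipe.SourceChannel].read(buf)
    if (n > 0)
      received(key.attachment().asInstanceOf[String]) =
        new String(buf.array(), 0, n, StandardCharsets.UTF_8)
  }
}
selector.close()
println(received.toSeq.sorted.mkString(", "))
```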

All called reactive

...I personally feel that Rust’s story on the server is going to be very firmly rooted in the “async world”. This may not be for the precise reason you’d imagine though! It’s generally accepted that best-in-class performance when you’re doing lots of I/O necessitates the usage of asynchronous I/O, which I think is critical to Rust’s use case in servers.

So, what is blocking you from using Rust on the server today?

Cost of reactive approach

Complexity

Async is hard (concurrency)

timingF("create_auction") {
  for {
    session <- sessionFuture
    _ <- session.executeAsync(query).asScala
  } yield auctionId.toString
}

def timingF[T](label: String)(f: => Future[T])
              (implicit ec: ExecutionContext): Future[T] = {
  val timer = cassandraQueryLatencies
    .labels(label)
    .startTimer()
  f.onComplete { _ =>
    val time = timer.observeDuration()
    log.info(s"Query [${label}] took ${time}")
  }
  f
}

java.lang.NullPointerException was thrown.

java.lang.NullPointerException

at com.datastax.driver.core.querybuilder.BuiltStatement.maybeRebuildCache(BuiltStatement.java:166)

at com.datastax.driver.core.querybuilder.BuiltStatement.getValues(BuiltStatement.java:264)

at com.datastax.driver.core.SessionManager.makeRequestMessage(SessionManager.java:558)

at com.datastax.driver.core.SessionManager.executeAsync(SessionManager.java:131)

at com.virtuslab.auctionhouse.primaryasync.AuctionServiceImpl.$anonfun$createAuction$2(AuctionService.scala:115)

at scala.concurrent.Future.$anonfun$flatMap$1(Future.scala:304)

at scala.concurrent.impl.Promise.$anonfun$transformWith$1(Promise.scala:37)

at scala.concurrent.impl.CallbackRunnable.run(Promise.scala:60)

at scala.concurrent.impl.ExecutionContextImpl$AdaptedForkJoinTask.exec(ExecutionContextImpl.scala:140)

at java.util.concurrent.ForkJoinTask.doExec(ForkJoinTask.java:289)

at java.util.concurrent.ForkJoinPool$WorkQueue.runTask(ForkJoinPool.java:1056)

at java.util.concurrent.ForkJoinPool.runWorker(ForkJoinPool.java:1692)

at java.util.concurrent.ForkJoinWorkerThread.run(ForkJoinWorkerThread.java:157)


Invalid amount of bind variables

com.datastax.driver.core.exceptions.InvalidQueryException: Invalid amount of bind variables

at com.datastax.driver.core.Responses$Error.asException(Responses.java:147)

at com.datastax.driver.core.DefaultResultSetFuture.onSet(DefaultResultSetFuture.java:179)

at com.datastax.driver.core.RequestHandler.setFinalResult(RequestHandler.java:198)

at com.datastax.driver.core.RequestHandler.access$2600(RequestHandler.java:50)

at com.datastax.driver.core.RequestHandler$SpeculativeExecution.setFinalResult(RequestHandler.java:852)

at com.datastax.driver.core.RequestHandler$SpeculativeExecution.onSet(RequestHandler.java:686)

at com.datastax.driver.core.Connection$Dispatcher.channelRead0(Connection.java:1089)

at com.datastax.driver.core.Connection$Dispatcher.channelRead0(Connection.java:1012)

at io.netty.channel.SimpleChannelInboundHandler.channelRead(SimpleChannelInboundHandler.java:105)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:356)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:342)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:335)

at io.netty.handler.timeout.IdleStateHandler.channelRead(IdleStateHandler.java:287)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:356)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:342)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:335)

at io.netty.handler.codec.MessageToMessageDecoder.channelRead(MessageToMessageDecoder.java:102)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:356)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:342)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:335)

at io.netty.handler.codec.ByteToMessageDecoder.fireChannelRead(ByteToMessageDecoder.java:312)

at io.netty.handler.codec.ByteToMessageDecoder.channelRead(ByteToMessageDecoder.java:286)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:356)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:342)

...

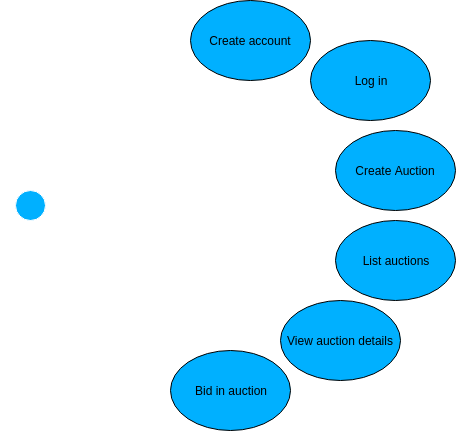
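One plausible reading of the two traces above is that `f` in `timingF` is a by-name parameter (`f: => Future[T]`), so every mention of `f` in the body re-runs the block. `f.onComplete` runs it once and the final `f` runs it again. Each call to `timingF` would therefore issue the query twice, and the two executions can race on the same built statement. The minimal sketch below shows the effect and the usual fix, which is to evaluate the by-name block once into a `val`. The Prometheus timer is replaced by `System.nanoTime`, and `runQuery` and its call counter are hypothetical stand-ins for the Cassandra call.

```scala
import java.util.concurrent.atomic.AtomicInteger
import scala.concurrent.{Await, ExecutionContext, Future}
import scala.concurrent.duration._

implicit val ec: ExecutionContext = ExecutionContext.global

val executions = new AtomicInteger(0) // hypothetical: counts how often the "query" is started

// Stand-in for the Cassandra call: starting it bumps the counter synchronously.
def runQuery(): Future[String] = { executions.incrementAndGet(); Future.successful("ok") }

// Same shape as on the slide: `f` is referenced twice, so the block runs twice.
def timingFBuggy[T](label: String)(f: => Future[T])(implicit ec: ExecutionContext): Future[T] = {
  val start = System.nanoTime()
  f.onComplete(_ => println(s"Query [$label] took ${(System.nanoTime() - start) / 1000000} ms"))
  f
}

// Fix: force the by-name block exactly once and reuse the resulting Future.
def timingFFixed[T](label: String)(f: => Future[T])(implicit ec: ExecutionContext): Future[T] = {
  val start = System.nanoTime()
  val future = f
  future.onComplete(_ => println(s"Query [$label] took ${(System.nanoTime() - start) / 1000000} ms"))
  future
}

Await.result(timingFBuggy("create_auction")(runQuery()), 5.seconds)
val buggyRuns = executions.getAndSet(0)

Await.result(timingFFixed("create_auction")(runQuery()), 5.seconds)
val fixedRuns = executions.get

println(s"buggy: $buggyRuns executions, fixed: $fixedRuns execution")
```

The by-name parameter is what lets `timingF` wrap an arbitrary block. That same convenience hides the second evaluation, because nothing at the call site shows that the block may run more than once.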

Experiment

Compare reactive and classical stack

- efficiency

- scalability

- development effort

How did we want to do that?

- Pick 2 stacks: reactive and sync

- Prepare 2 implementations of the same set of use cases

- Test them!!!

What did we want to test?

We wanted to cover typical user stories in a web application.

So, we could create a set of dummy services to test... or a simple web app...

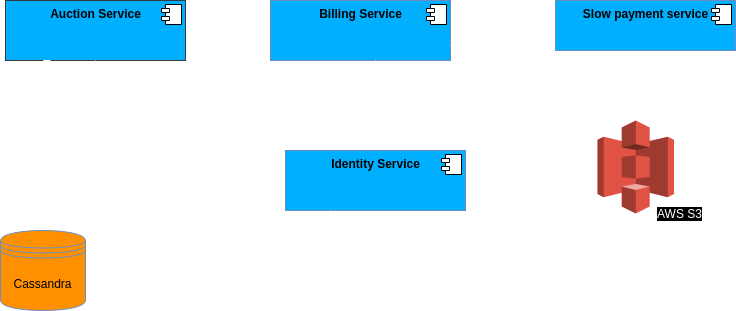

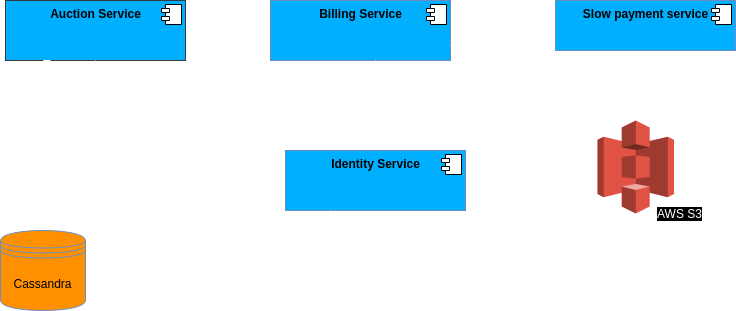

Domain, applications and infrastructure

AuctionHouse App

Architecture


Sync:

- Scalatra (servlets)

- AWS S3 blocking API

- Sync Cassandra DataStax driver

Reactive:

- Akka Http

- Alpakka

- Async Cassandra DataStax driver

Infrastructure

Testing

Create auction scenario:

- Create account

- Log in

- Create auction

Bid in auction scenario:

- Create account

- Log in

- List auctions

- View auction

- Bid

- Pay

Simulation types:

- heavisideUsers

- rampUsers

- atOnceUsers

Results

Scalatra

- good old servlets

- thread per request model

- blocking IO

- Jetty servlet container

Different kinds of beasts


Akka HTTP

- uses an actor system and async messaging between actors to spread work across all cores

- asynchronous IO

- optimized for persistent connections and streaming

$ ab -c 50 -n 3000 'http://akka-http.local/_status'

Concurrency Level: 50
Time taken for tests: 2.801 seconds
Complete requests: 3000
Failed requests: 0
Total transferred: 642000 bytes
Requests per second: 1071.21 [#/sec] (mean)
Time per request: 46.676 [ms] (mean)
Time per request: 0.934 [ms] (mean, across all concurrent requests)
Transfer rate: 223.87 [Kbytes/sec] received

$ ab -k -c 50 -n 3000 'http://akka-http.local/_status'

Concurrency Level: 50
Time taken for tests: 0.595 seconds
Complete requests: 3000
Failed requests: 0
Keep-Alive requests: 3000
Total transferred: 657000 bytes
Requests per second: 5039.82 [#/sec] (mean)
Time per request: 9.921 [ms] (mean)
Time per request: 0.198 [ms] (mean, across all concurrent requests)
Transfer rate: 1077.85 [Kbytes/sec] received

$ ab -c 50 -n 3000 'http://scalatra.local/_status'

Concurrency Level: 50
Time taken for tests: 1.620 seconds
Complete requests: 3000
Failed requests: 0
Total transferred: 642000 bytes
Requests per second: 1851.71 [#/sec] (mean)
Time per request: 27.002 [ms] (mean)
Time per request: 0.540 [ms] (mean, across all concurrent requests)
Transfer rate: 386.98 [Kbytes/sec] received

$ ab -k -c 50 -n 3000 'http://scalatra.local/_status'

Concurrency Level: 50
Time taken for tests: 0.782 seconds
Complete requests: 3000
Failed requests: 0
Keep-Alive requests: 3000
Total transferred: 714000 bytes
Requests per second: 3835.80 [#/sec] (mean)
Time per request: 13.035 [ms] (mean)
Time per request: 0.261 [ms] (mean, across all concurrent requests)
Transfer rate: 891.52 [Kbytes/sec] received
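The two "Time per request" lines in the `ab` output are easy to misread. `ab` derives its summary figures from the raw counts: $n$ completed requests, concurrency $c$ and total wall-clock time $T$:

$$
\text{Requests per second} = \frac{n}{T}, \qquad
t_{\text{mean}} = \frac{c\,T}{n}, \qquad
t_{\text{mean, across all concurrent}} = \frac{T}{n}
$$

For the Akka HTTP run without keep-alive ($n = 3000$, $c = 50$, $T = 2.801\,\text{s}$):

$$
\frac{3000}{2.801} \approx 1071\ \text{req/s}, \qquad
\frac{50 \cdot 2.801}{3000} \approx 46.7\ \text{ms}, \qquad
\frac{2.801}{3000} \approx 0.934\ \text{ms}
$$

These match the reported values up to rounding of $T$, so the keep-alive and non-keep-alive runs can be compared directly on $T$ alone.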

Identity is screwed

and everyone knows about it

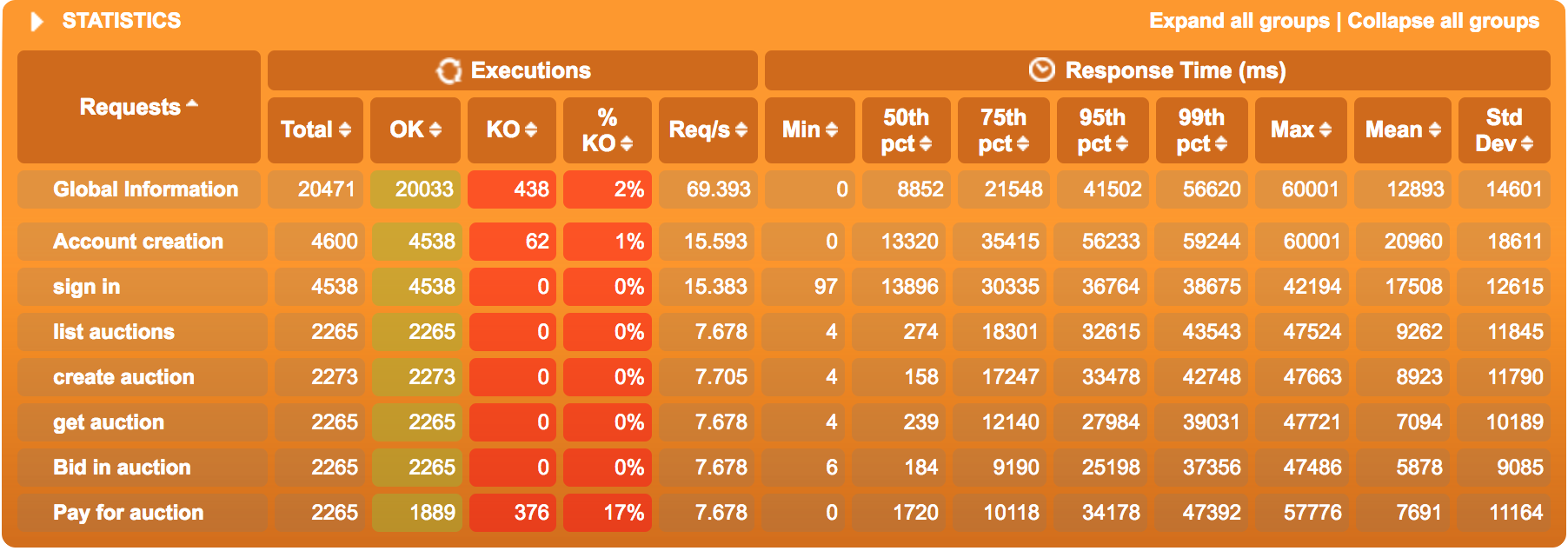

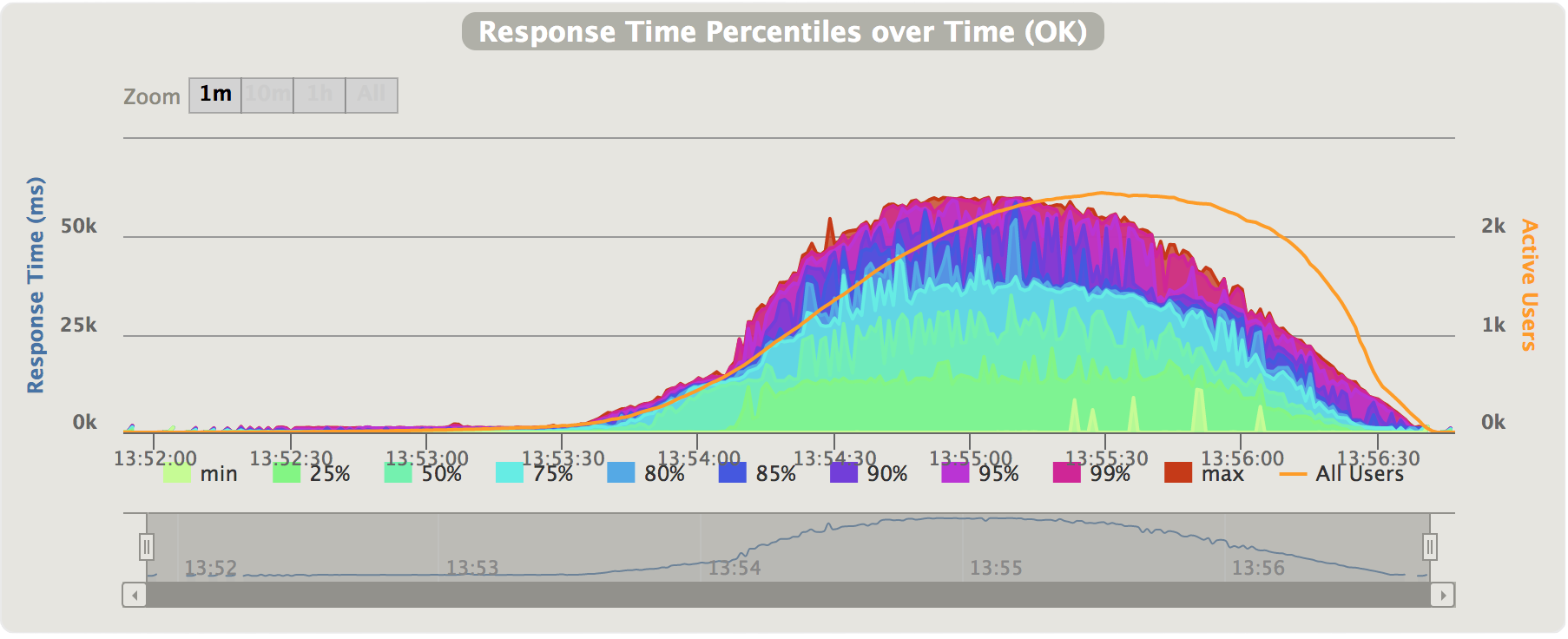

Sync stack

Heaviside load scenario, 2300 users, 300 seconds

Reactive Microservices: The Good Parts

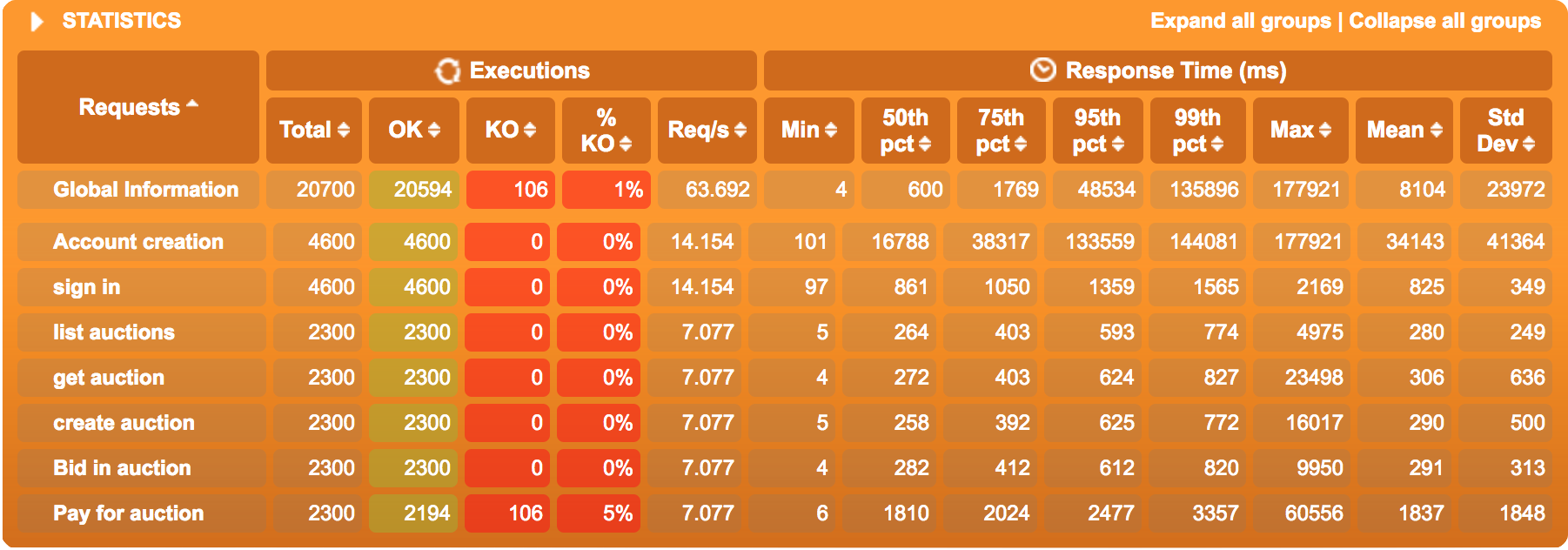

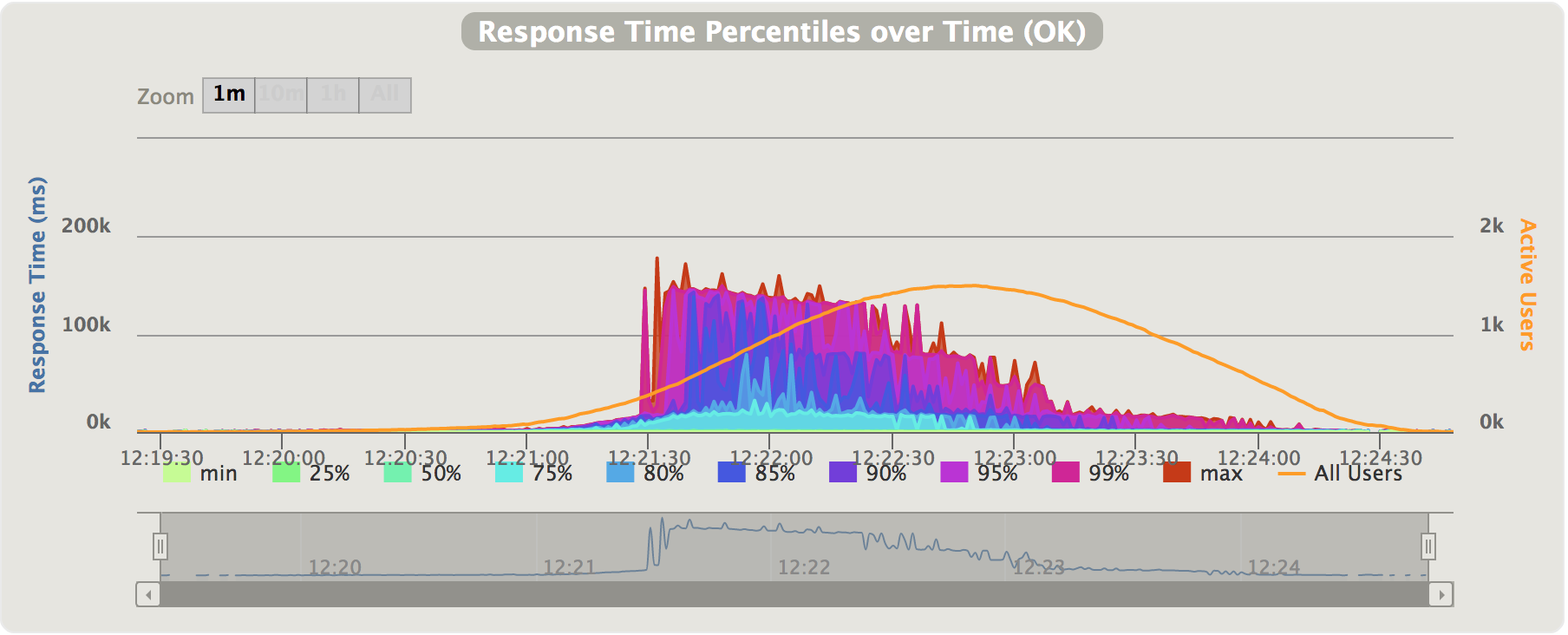

Reactive stack

Heaviside load scenario, 2300 users, 300 seconds


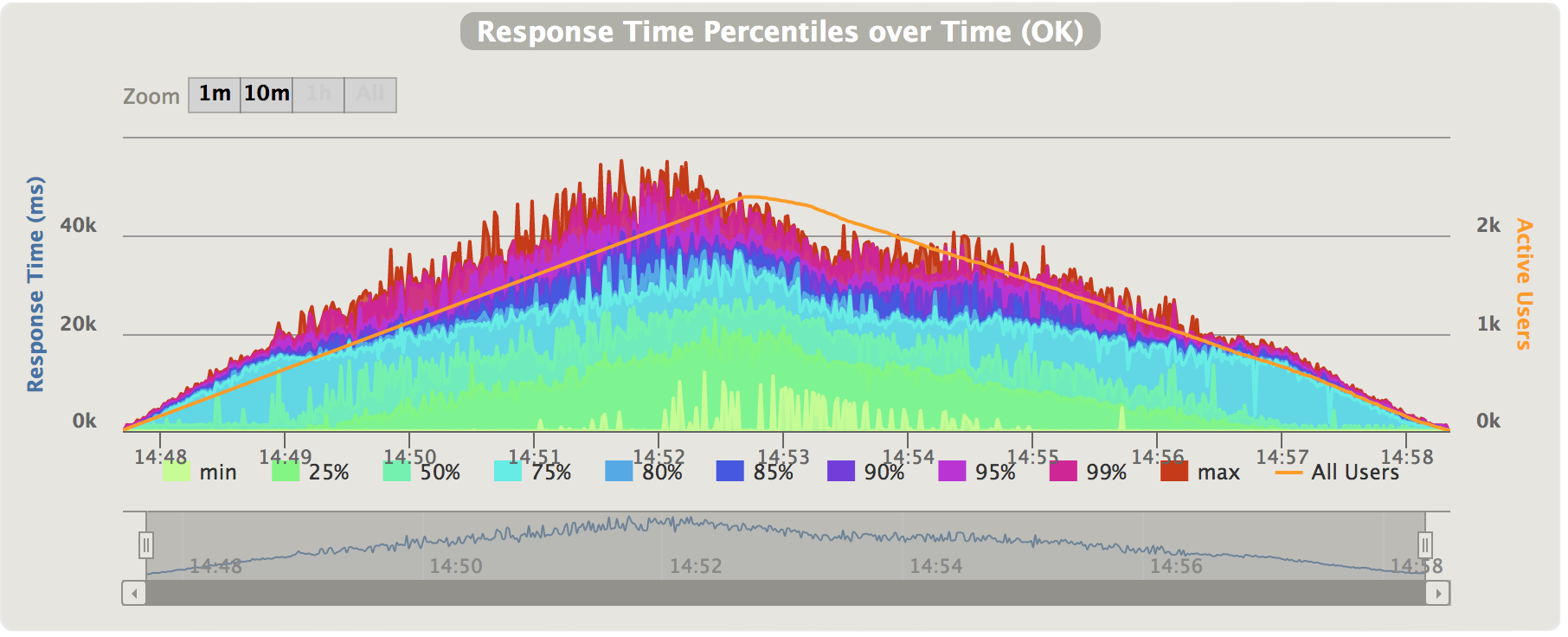

Async

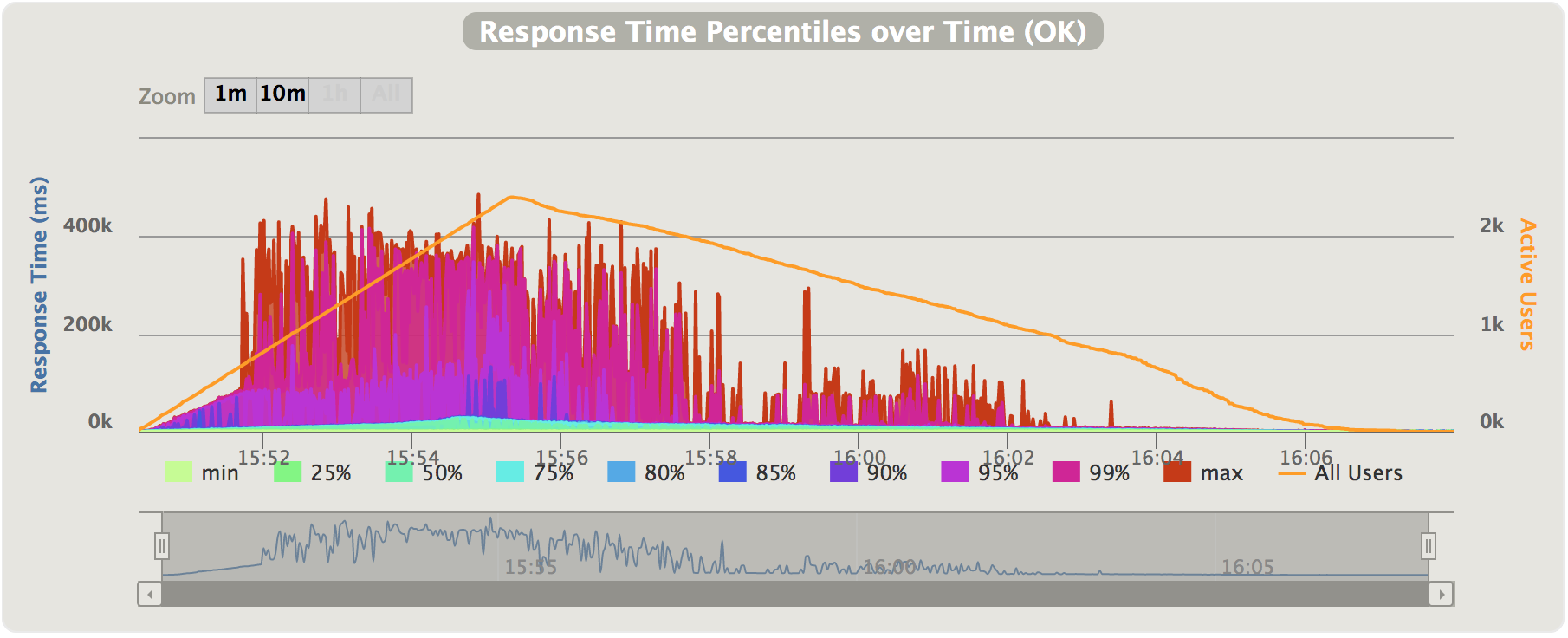

Sync

Reactive Microservices: The Bad Parts

Reactive stack

- Configuration tuning:

  - Timeouts

  - Queue sizes

- Learning curve

Sync stack

- Just worked.

Reactive Microservices: The Hard Parts

Sync

Async

Final remarks

- Decide if you need to go reactive; if so:

  - Measure performance, perform load testing

  - Your mileage WILL vary, so learn how the guts work

Thank you for listening

http://github.com/VirtusLab/ReactSphere-reactive-beyond-hype

Reactive - beyond hype (ReactSphere)

By Pawel Dolega
